A Journey from Microcontrollers to Microprocessors with AUTOSAR & ROS

- Tobias Stark

- May 11, 2022

Updated: Feb 13, 2023

Over the last few decades, automotive software has become increasingly centralized. Instead of having a separate microcontroller with software for each vehicle function, modern vehicles are moving to powerful microprocessors where a single chip runs the software functions previously handled by many discrete microcontrollers.

This change in the underlying hardware also changes the automotive software landscape. Microcontrollers were usually extremely resource-constrained and lacked memory management units. They therefore typically ran an OSEK operating system, often as part of an AUTOSAR Classic deployment. OSEK is well suited to such environments because it incurs little overhead in CPU time and memory footprint.

On the powerful centralized vehicle computers, however, there are better alternatives available. By using commodity POSIX operating systems such as Linux or QNX, developers benefit from virtual memory support and gain access to a vast tooling and software ecosystem. In addition, this enables the use of higher-level frameworks such as ROS and Adaptive AUTOSAR.

Unfortunately, many of those features come at a performance cost. For example, memory isolation significantly increases the cost of context switches and makes it more difficult to communicate between processes without repeatedly copying the message data. Similarly, ROS's approach of modularizing software into independent and reusable nodes can lead to additional communication overhead compared to a monolithic implementation.

Although these costs are usually negligible compared to the performance gains of microprocessors, some common software architectures from the AUTOSAR world can incur large overheads on POSIX systems. In vehicle programs at several OEMs, we have observed such overhead reach a significant fraction of the actual computational load, negating much of the promised performance benefit of microprocessors and threatening the viability of the deployment. Architectures with many small runnables are particularly susceptible: performance can degrade surprisingly if, for example, each runnable that ran on OSEK is ported to run as a separate process on a POSIX OS or RTOS.

In the DATE conference paper “A Middleware Journey from Microcontrollers to Microprocessors,” we describe how we address these challenges in our products Apex.OS*, an ASIL D certified software framework based on ROS 2, and Apex.Middleware*, a publish/subscribe middleware based on Eclipse Cyclone DDS™. We use four techniques:

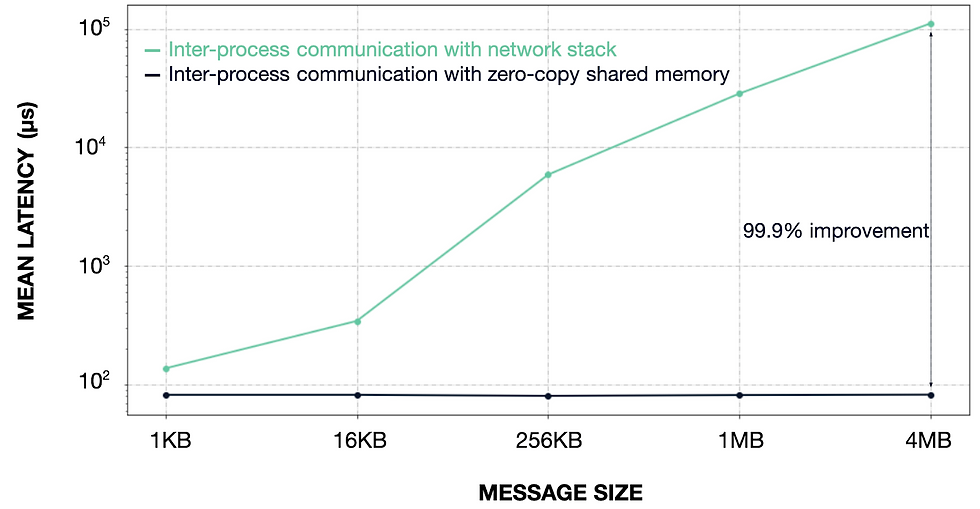

1. Zero-Copy Communication: Apex.Middleware* contains the Eclipse iceoryx™ middleware, which supports zero-copy communication within and across processes. Because only a reference to the data is exchanged, even large messages can be transmitted at roughly the cost of a small status message. In our experiments, zero-copy communication reduced the latency of megabyte-sized messages from tens of milliseconds to less than 100 microseconds (Figure 2). Apex.Middleware* topics can therefore be used to efficiently share memory-heavy intermediate results such as point clouds or camera images. Zero-copy also makes the CPU cost of a message small and predictable regardless of its size, and it greatly reduces jitter, providing deterministic messaging that fits within a safety budget.

Figure 2: Zero-copy communication as implemented in Apex.Middleware* reduces communication latency for large messages by multiple orders of magnitude, Source: Apex.AI
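To make the idea concrete, here is a minimal single-process Python sketch of loan-based zero-copy publishing. It is not iceoryx or Apex.Middleware* code: `ChunkPool`, `ZeroCopyTopic`, and `SampleHandle` are hypothetical names, and a list of `bytearray` chunks stands in for the shared-memory segment that a real implementation would map into several processes. The publisher writes directly into a loaned chunk, only a small handle travels through the topic, and the subscriber reads the same bytes through a `memoryview`.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class SampleHandle:
    """What actually travels through the topic: a chunk index and a payload length."""
    chunk: int
    size: int


class ChunkPool:
    """Hypothetical pre-allocated pool, standing in for a shared-memory segment."""

    def __init__(self, num_chunks, chunk_size):
        self.chunks = [bytearray(chunk_size) for _ in range(num_chunks)]
        self.free = deque(range(num_chunks))

    def loan(self):
        # The publisher borrows a chunk and fills it in place.
        return self.free.popleft()

    def release(self, chunk):
        # The reader returns the chunk to the pool once it is done with it.
        self.free.append(chunk)


class ZeroCopyTopic:
    def __init__(self, pool):
        self.pool = pool
        self.pending = deque()

    def publish(self, handle):
        # Constant cost regardless of payload size: only the small handle is queued.
        self.pending.append(handle)

    def take(self):
        handle = self.pending.popleft()
        # A memoryview exposes the publisher's bytes without copying them.
        return memoryview(self.pool.chunks[handle.chunk])[: handle.size]


pool = ChunkPool(num_chunks=4, chunk_size=1 << 20)  # four 1 MiB chunks
topic = ZeroCopyTopic(pool)

chunk = pool.loan()
pool.chunks[chunk][:5] = b"cloud"  # write directly into the loaned chunk
topic.publish(SampleHandle(chunk, 5))

view = topic.take()
same_buffer = view.obj is pool.chunks[chunk]  # the view wraps the publisher's buffer
received = bytes(view)  # copied here only so the demo can inspect the payload
view.release()
pool.release(chunk)
```

The cost of `publish` and `take` does not depend on `chunk_size`, which is the property the text describes; a production middleware additionally needs reference counting so that a chunk is only returned once every subscriber has released it.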

2. Executing multiple nodes in a single thread: Both ROS and Apex.OS* structure an application as a collaboration of independent nodes. Each node is a self-contained entity that communicates with the rest of the system through the middleware. This design fosters modularity and code reuse, as nodes can be added, removed, or replaced without affecting the rest of the system.

However, the code encapsulated by such a node can be relatively short and might not justify the overhead of a separate thread. Both ROS and Apex.OS* can therefore multiplex multiple nodes onto a single executor thread (or executor for short).

Running several nodes in one executor thread can significantly reduce context switches and threading overhead. If, for example, two consecutive stages of a radar pipeline run in the same executor, the sender writes its data into a middleware buffer, hands it over to the middleware, and returns to the main executor loop. The executor then notices that a message for the receiver has arrived and invokes the receiver in the same thread. Thanks to the zero-copy middleware, the entire transaction runs without any context switches or message copies.
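The dispatch loop described above can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not the ROS 2 or Apex.OS* executor API: `Topic`, `Executor`, `spin_until_idle`, and the two node callbacks are hypothetical, and a `deque` per topic stands in for the middleware buffers. Both nodes are invoked from the thread that calls `spin_until_idle`, so handing data from one to the other involves no thread switch.

```python
import threading
from collections import deque


class Topic:
    """Hypothetical stand-in for a middleware topic buffer."""

    def __init__(self):
        self.queue = deque()

    def publish(self, msg):
        self.queue.append(msg)


class Executor:
    """Hypothetical single-threaded executor multiplexing several nodes."""

    def __init__(self):
        self.subscriptions = []  # (topic, callback) pairs

    def add_subscription(self, topic, callback):
        self.subscriptions.append((topic, callback))

    def spin_until_idle(self):
        # Keep dispatching in the calling thread until no topic has pending data.
        work_done = True
        while work_done:
            work_done = False
            for topic, callback in self.subscriptions:
                while topic.queue:
                    callback(topic.queue.popleft())
                    work_done = True


raw, filtered = Topic(), Topic()
thread_ids, results = [], []


def filter_node(frame):
    thread_ids.append(threading.get_ident())
    # Publish the result and return to the executor loop.
    filtered.publish([x for x in frame if x > 0.5])


def tracker_node(detections):
    thread_ids.append(threading.get_ident())
    results.append(len(detections))


executor = Executor()
executor.add_subscription(raw, filter_node)
executor.add_subscription(filtered, tracker_node)

raw.publish([0.2, 0.7, 0.9])  # e.g. one radar frame
executor.spin_until_idle()
```

After the call, `results` holds `[2]` and both entries in `thread_ids` are the calling thread's identifier.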

Figure 3: Improvement observed from running multiple nodes as a pre-declared chain in a single thread, Source: Apex.AI

To reduce the message signaling and executor overhead even further, Apex.OS* skips the wait-set, which the executor normally uses to detect incoming messages, and runs the sender and receiver nodes back to back in the same thread. In Figure 3, control flow thus jumps directly from the end of node A to the beginning of node B, without checking the middleware or executing additional executor code. All this optimization requires is that users explicitly declare the processing chain during executor setup. Such pre-declared chains are similar to the traditional AUTOSAR technique of running multiple runnables on the same OSEK task in a fixed order. In our experiments, this optimization reduced the mean message latency by about 30% (Figure 3).
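A pre-declared chain can be sketched as follows. The names `ChainExecutor`, `declare_chain`, and `run_chain` are hypothetical and do not reflect the Apex.OS* API; the sketch only assumes, as the text says, that the chain is declared once during setup. Nodes still exchange data through topic buffers, but the executor calls them in the declared order without polling topics or consulting a wait-set in between.

```python
from collections import deque


class Topic:
    """Hypothetical stand-in for a middleware topic buffer."""

    def __init__(self):
        self.queue = deque()

    def publish(self, msg):
        self.queue.append(msg)

    def take(self):
        return self.queue.popleft()


class ChainExecutor:
    """Hypothetical executor that runs a pre-declared chain of nodes in a fixed order."""

    def __init__(self):
        self.chain = []

    def declare_chain(self, *nodes):
        # Declared once at setup, like runnables mapped to one OSEK task.
        self.chain = list(nodes)

    def run_chain(self):
        # No topic polling and no wait-set between nodes: the declaration
        # guarantees that each node's input was just produced by its predecessor.
        for node in self.chain:
            node()


camera, features, objects = Topic(), Topic(), Topic()
call_order = []


def node_a():
    call_order.append("A")
    frame = camera.take()
    features.publish([2 * px for px in frame])


def node_b():
    call_order.append("B")
    objects.publish(sum(features.take()))


executor = ChainExecutor()
executor.declare_chain(node_a, node_b)
camera.publish([1, 2, 3])
executor.run_chain()
```

The trade-off is flexibility: the executor no longer reacts to whichever message arrives first, so this only fits pipelines whose order is fixed in advance.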

3. Avoiding context switches in the middleware: In most middleware implementations, receiving a message involves multiple threads; for example, a middleware thread receives the data and then wakes the application's receiver thread. The system thus needs to perform multiple context switches for each middleware message, which can noticeably increase the middleware's message processing latency. Apex.Middleware* avoids this issue by running the message reception logic entirely in the receiver thread, reducing the number of context switches required to receive a message. In our experiments, this optimization reduced the mean message latency by another 20% (Figure 4).

Figure 4: Improvement from avoiding context switches in middleware, Source: Apex.AI
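The difference between the two receive paths can be illustrated with Python threads. This is a conceptual sketch only: `relayed_receive` and `direct_receive` are hypothetical helpers, a `queue.Queue` stands in for the transport, and the code counts which threads touch each message rather than measuring OS context switches. In the relayed path a middleware thread hands every message to the receiver, so two threads are involved; in the direct path the receiver thread performs the reception itself.

```python
import queue
import threading


def relayed_receive(transport, n):
    """Common pattern: a middleware thread reads the transport and hands off to the receiver."""
    handoff = queue.Queue()
    touched = []

    def middleware_thread():
        for _ in range(n):
            msg = transport.get()
            touched.append(threading.get_ident())
            handoff.put(msg)  # wakes the receiver: one extra thread hop per message

    worker = threading.Thread(target=middleware_thread)
    worker.start()
    received = []
    for _ in range(n):
        received.append(handoff.get())
        touched.append(threading.get_ident())
    worker.join()
    return received, set(touched)


def direct_receive(transport, n):
    """Reception logic runs entirely in the receiver thread, with no handoff."""
    touched, received = [], []
    for _ in range(n):
        received.append(transport.get())
        touched.append(threading.get_ident())
    return received, set(touched)


relayed_transport, direct_transport = queue.Queue(), queue.Queue()
for i in range(3):
    relayed_transport.put(i)
    direct_transport.put(i)

relayed, relayed_threads = relayed_receive(relayed_transport, 3)
direct, direct_threads = direct_receive(direct_transport, 3)
```

Both paths deliver the same messages, but `relayed_threads` contains two thread identifiers while `direct_threads` contains only the receiver's.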

4. Identifying non-triggering topics: A node in a perception pipeline doing sensor fusion typically generates output by processing input data received from multiple subscriptions. Often, however, the node is triggered by only one specific input, while the other inputs are stored and aggregated for later processing. Frameworks like ROS nevertheless invoke a callback for every message on these other inputs. Such needless activations waste time and resources.

Figure 5: Supporting non-triggering topics in a sensor fusion pipeline, Source: Apex.AI

Apex.OS* avoids this problem by explicitly exposing the middleware queues to the node developer. Instead of being triggered every time a message arrives, nodes can distinguish between triggering and non-triggering topics. Only a message on a triggering topic activates the node; messages on non-triggering topics remain in the middleware buffers until the node explicitly requests them. The observed improvement is comparable to that of the single-thread optimization (Figure 3), because changing a topic from triggering to non-triggering avoids the context switches and executor overhead of needlessly triggering the node.
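A small Python sketch shows the effect on activation counts. `Middleware`, `FusionNode`, `deliver`, and `take_all` are hypothetical names, not the Apex.OS* API, and the topic names are made up for illustration. Every message is buffered in a per-topic queue, but only a message on the triggering topic (`camera`) activates the node, which then explicitly pulls whatever has accumulated on the non-triggering topics.

```python
from collections import deque


class Middleware:
    """Hypothetical per-topic queues; only triggering topics activate the node."""

    def __init__(self, triggering):
        self.triggering = set(triggering)
        self.queues = {}
        self.node = None

    def deliver(self, topic, msg):
        self.queues.setdefault(topic, deque()).append(msg)
        if topic in self.triggering:
            self.node.execute(self)  # the only path that activates the node

    def take_all(self, topic):
        # Drain a topic's buffer on explicit request from the node.
        q = self.queues.setdefault(topic, deque())
        items = list(q)
        q.clear()
        return items


class FusionNode:
    def __init__(self):
        self.activations = 0
        self.fused = []

    def execute(self, mw):
        self.activations += 1
        frame = mw.take_all("camera")[-1]  # latest triggering sample
        radar = mw.take_all("radar")  # explicitly pull buffered inputs
        lidar = mw.take_all("lidar")
        self.fused.append((frame, len(radar), len(lidar)))


node = FusionNode()
mw = Middleware(triggering=["camera"])
mw.node = node

for i in range(3):
    mw.deliver("radar", f"radar-{i}")
    mw.deliver("lidar", f"lidar-{i}")
mw.deliver("camera", "frame-0")
```

Seven messages arrive but the node runs once, fusing one camera frame with three radar and three lidar samples; a callback-per-message design would have activated the node seven times.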

Conclusion

Porting AUTOSAR-style architectures to POSIX systems may lead to surprising performance degradation due to the cost of isolation and communication overheads. Our products Apex.OS* and Apex.Middleware* reduce this overhead through four techniques: zero-copy communication, fewer context switches in the middleware, multiplexing nodes onto executor threads, and non-triggering subscriptions. This allows users to run even small nodes efficiently and rely on the publish/subscribe mechanism even for large messages and intermediate results.

These improvements make it easy for automotive developers to port AUTOSAR-style software architectures to Apex.OS*. By reducing the cost of memory isolation and communication, they preserve the performance assumptions of AUTOSAR Classic on POSIX systems. Apex.OS* and Apex.Middleware* thus provide microcontroller-like performance in a microprocessor environment.

*As of January 2023, we have renamed our products: Apex.Grace was formerly known as Apex.OS, and Apex.Ida was formerly known as Apex.Middleware.