Advancing Safe Calibration for Automated Driving Sensor Suites

- spleenlab

- Sep 1, 2023

Updated: May 17, 2024

As automotive technology progresses toward partially and highly automated vehicles, the safety of these advanced systems becomes paramount. A key factor in achieving it is the accurate calibration of the sensor suites that power these vehicles' perception.

The Need for Robust Perception

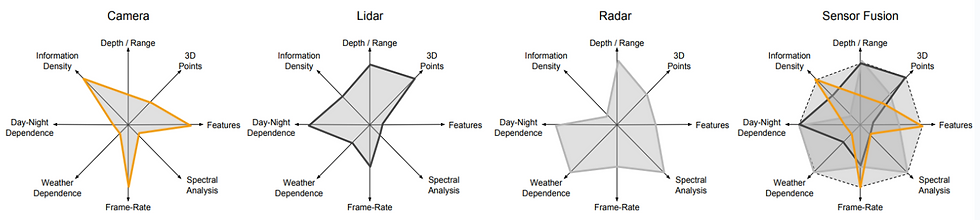

In modern vehicles equipped with many sensors, such as cameras, lidars, and radars, redundancy is the foundation of safety. Combined with multimodal sensor fusion, this redundancy improves performance and enables robust perception even in adverse weather, which is crucial for safety-critical applications. Fig. 1 highlights the significance of sensor fusion: it compensates for the weaknesses of individual sensors by combining their complementary strengths.
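Fusion can be done in many ways, and this post does not say which technique is used. As a minimal illustration of why combining sensors helps, the sketch below uses textbook inverse-variance weighting of two independent range estimates. The `Measurement` type, the `fuse` helper, and all readings and noise variances are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Measurement:
    value: float     # estimated distance to an obstacle, metres
    variance: float  # measurement noise variance, m^2 (assumed known)


def fuse(measurements: Sequence[Measurement]) -> Measurement:
    """Inverse-variance weighted average of independent measurements."""
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    return Measurement(value=value, variance=1.0 / total)


# Hypothetical readings in fog: the camera estimate is noisy, the radar one is not.
camera = Measurement(value=21.0, variance=4.0)
radar = Measurement(value=20.0, variance=0.25)
fused = fuse([camera, radar])
print(f"{fused.value:.3f} m, variance {fused.variance:.3f} m^2")  # 20.059 m, variance 0.235 m^2
```

The fused variance is always smaller than that of either input, and the estimate leans toward whichever sensor is currently more reliable. This is the intuition behind fusion compensating for individual sensor weaknesses.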

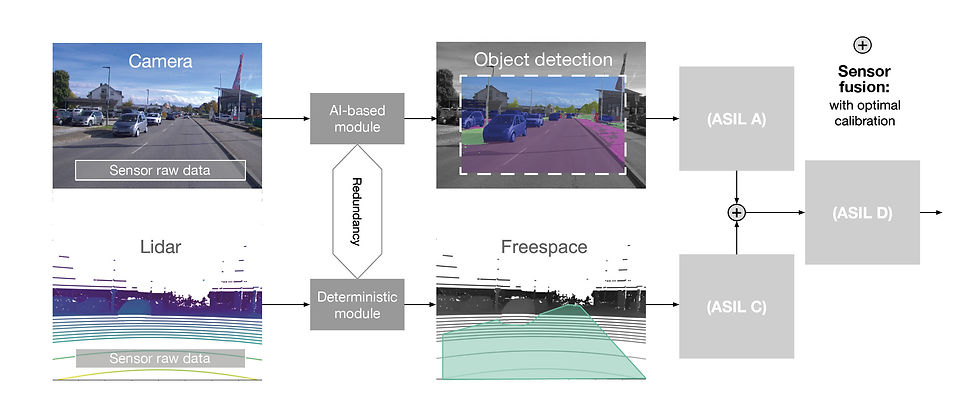

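To ensure safety, the automotive industry follows the ISO 26262 standard, which defines Automotive Safety Integrity Levels (ASIL) from A (lowest) to D (highest), with QM (quality management) for functions that are not safety-relevant. These levels classify the risk posed by a failure and guide the design of safety-critical systems. However, the algorithms processing data from different sensors need not all fulfill the same ASIL. This leads to a concept known as ASIL decomposition: a highly critical safety requirement is split into redundant, sufficiently independent sub-requirements, each of which can be developed to a lower ASIL. Demanding functions thus become feasible to implement without compromising overall safety.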
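To make the idea concrete, here is a small sketch that lists the two-way decompositions available for a given ASIL. It relies on an encoding assumption, stated in the code, that reproduces the decomposition schemes of ISO 26262-9 as we understand them. The `Asil` enum and the `decompositions` helper are hypothetical illustrations, not part of the standard or of any tooling.

```python
from __future__ import annotations

from enum import IntEnum
from itertools import combinations_with_replacement


class Asil(IntEnum):
    QM = 0  # quality management: no ASIL requirement
    A = 1
    B = 2
    C = 3
    D = 4   # most stringent


def decompositions(target: Asil) -> list[tuple[Asil, Asil]]:
    """Pairs of ASILs into which a requirement rated `target` can be decomposed.

    Encoding assumption: ranking QM..D as 0..4, the decomposition schemes
    listed in ISO 26262-9 are exactly the pairs whose ranks sum to `target`.
    The standard also requires the redundant elements to be sufficiently
    independent; that condition is NOT checked here.
    """
    levels = sorted(Asil, reverse=True)  # D, C, B, A, QM
    return [(a, b) for a, b in combinations_with_replacement(levels, 2) if a + b == target]


# Example: an ASIL D perception function implemented by two redundant,
# independent paths (say, a camera-based and a lidar-based one).
for a, b in decompositions(Asil.D):
    print(f"ASIL D -> {a.name}(D) + {b.name}(D)")
```

The `(D)` suffix follows the standard's notation: each decomposed element keeps a record of the ASIL it originated from.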

The Challenge of Calibration

Sensor fusion across different modalities, such as cameras and lidars, is essential for highly safety-critical functions in automated vehicles. Calibrating these sensors, however, remains an ongoing challenge: vibration, collisions, and other environmental effects shift the sensors' mountings and invalidate their extrinsic calibration (the relative position and orientation between sensors). Even a tiny calibration error can lead to catastrophic failures in perception functions, because angular errors grow with distance.
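To put "tiny" in numbers: a rotational (yaw) error θ displaces a point straight ahead at range d sideways by d·sin θ, so the effect grows with distance. In a pinhole camera, the same rotation shifts image points by roughly f·tan θ regardless of depth. The sketch below uses hypothetical values (a 0.5° error, a 1000 px focal length); the helper names are illustrative.

```python
import math


def lateral_offset_m(range_m: float, yaw_error_deg: float) -> float:
    """Sideways displacement of a point straight ahead at `range_m` caused by a yaw error."""
    return range_m * math.sin(math.radians(yaw_error_deg))


def pixel_shift_px(focal_px: float, yaw_error_deg: float) -> float:
    """Horizontal image shift of a point on the optical axis (pinhole model).

    A pure rotation shifts image points independently of their depth.
    """
    return focal_px * math.tan(math.radians(yaw_error_deg))


YAW_ERROR_DEG = 0.5  # hypothetical miscalibration
FOCAL_PX = 1000.0    # hypothetical camera focal length in pixels

for range_m in (10, 50, 100):
    print(f"{range_m:>3} m: {lateral_offset_m(range_m, YAW_ERROR_DEG):.3f} m sideways")
print(f"image shift: {pixel_shift_px(FOCAL_PX, YAW_ERROR_DEG):.1f} px")
```

A half-degree error already moves a point at 100 m by about 0.87 m, roughly the width of a pedestrian, and shifts projected lidar points by about 8.7 px in the image.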

Addressing Calibration: A Strong Foundation

Robust perception therefore requires a comprehensive approach that combines ASIL decomposition with a strong calibration foundation. Fig. 4 illustrates this combination and shows why reliable calibration is essential for safe and effective perception in autonomous driving.

Online Calibration: A Paradigm Shift

Traditional extrinsic calibration is typically performed once, during factory commissioning, and cannot account for sensors shifting over a vehicle's lifespan. The solution lies in Continuous Online Extrinsic Calibration (COEC), which calibrates sensors in real time from the data they record while the vehicle operates. COEC offers significantly greater robustness, precision, and accuracy than existing state-of-the-art (SOTA) methods.
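COEC's internals are not described in this post. To illustrate the general idea of online calibration, re-estimating extrinsics continuously from live data instead of once at the factory, here is a deliberately simplified sketch. It is not COEC. It assumes matched landmark observations are already available, ignores roll, pitch and height, and uses a closed-form 2D least-squares rigid fit over a sliding window. `OnlineYawCalibrator`, `transform`, and all simulated data are hypothetical.

```python
import math
from collections import deque


class OnlineYawCalibrator:
    """Sliding-window re-estimation of a planar extrinsic (yaw, tx, ty).

    Hypothetical simplification: each observation is a pair of matched 2D
    points (p_a in sensor A's frame, p_b in sensor B's frame) of the same
    static landmark. Roll, pitch and height are ignored.
    """

    def __init__(self, window: int = 500) -> None:
        self.pairs = deque(maxlen=window)  # oldest pairs fall out automatically

    def add(self, p_a, p_b) -> None:
        self.pairs.append((p_a, p_b))

    def estimate(self):
        """Least-squares fit of p_b = R(yaw) @ p_a + t over the current window."""
        n = len(self.pairs)
        if n < 2:
            raise ValueError("need at least two correspondences")
        ax = sum(a[0] for a, _ in self.pairs) / n
        ay = sum(a[1] for a, _ in self.pairs) / n
        bx = sum(b[0] for _, b in self.pairs) / n
        by = sum(b[1] for _, b in self.pairs) / n
        s_dot = s_cross = 0.0
        for a, b in self.pairs:
            dax, day = a[0] - ax, a[1] - ay  # centred point in frame A
            dbx, dby = b[0] - bx, b[1] - by  # centred point in frame B
            s_dot += dax * dbx + day * dby
            s_cross += dax * dby - day * dbx
        yaw = math.atan2(s_cross, s_dot)  # rotation that best aligns the centred sets
        c, s = math.cos(yaw), math.sin(yaw)
        tx = bx - (c * ax - s * ay)
        ty = by - (s * ax + c * ay)
        return yaw, tx, ty


def transform(p, yaw, tx, ty):
    """Apply a planar rigid transform to point p (used to simulate data)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


cal = OnlineYawCalibrator(window=50)
landmarks = [(10.0 + i, 3.0 * (-1) ** i) for i in range(50)]
for yaw_true in (0.010, 0.015):  # simulated mount drift between two drives
    for p in landmarks:
        cal.add(p, transform(p, yaw_true, 0.2, -0.1))
    yaw, tx, ty = cal.estimate()
    print(f"true yaw {yaw_true:.3f} rad, estimated {yaw:.3f} rad")
```

Because the window holds exactly one drive's worth of pairs, the second estimate reflects only the drifted mount. A production system would additionally need data association, outlier rejection, observability checks, and confidence estimates before applying a new calibration; none of that is attempted here.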

Integration and Real-World Demonstration

To validate the proposed solution in practice, a real-world integration is demonstrated on a sensor suite named "SAM" (Spleenlab Apex.AI Mobil). Equipped with various sensors, including cameras and lidars, SAM shows COEC in action: it performs automatic calibration even in challenging scenarios such as L5 intersections, demonstrating the approach's practicality and value.

Validating the Approach

The efficacy of COEC is validated through extensive experiments on established datasets, namely KITTI and its successor, KITTI-360. COEC consistently outperforms standard methods, in some cases even surpassing the accuracy of the calibration supplied with the datasets as ground truth. These results underscore the robustness of the proposed calibration approach.
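The post does not specify how accuracy was measured. A common convention in extrinsic-calibration work is to report the angle of the residual rotation and the Euclidean translation offset relative to a reference calibration. The sketch below assumes that convention, with hypothetical numbers and illustrative helper names. When the reference is itself imperfect, these metrics measure agreement with the reference rather than absolute error, which is how an estimate can, in principle, be better than the provided ground truth.

```python
import math


def rot_z(yaw_rad):
    """3x3 rotation about the z axis as nested lists."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(m):
    return [list(row) for row in zip(*m)]


def rotation_error_deg(r_est, r_ref):
    """Angle of the residual rotation R_ref^T R_est, in degrees."""
    r_err = matmul(transpose(r_ref), r_est)
    trace = r_err[0][0] + r_err[1][1] + r_err[2][2]
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp rounding noise
    return math.degrees(math.acos(cos_angle))


def translation_error_cm(t_est, t_ref):
    """Euclidean distance between translations given in metres, reported in cm."""
    return 100.0 * math.dist(t_est, t_ref)


# Hypothetical estimate versus a reference calibration.
r_ref, t_ref = rot_z(0.0), (0.50, 0.00, -0.30)
r_est, t_est = rot_z(math.radians(0.2)), (0.52, 0.01, -0.30)
print(f"rotation error: {rotation_error_deg(r_est, r_ref):.3f} deg")
print(f"translation error: {translation_error_cm(t_est, t_ref):.2f} cm")
```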

Conclusion: A Leap Forward in Calibration

As the automotive industry advances toward automated driving, the importance of robust calibration cannot be overstated. Combining ASIL decomposition with COEC promises to revolutionize sensor calibration. By offering enhanced accuracy, real-time capability, and compatibility with various sensor types, this approach sets a new standard for safe and efficient sensor fusion in automated vehicles. With Apex.AI's Apex.Grace and Spleenlab's VISIONAIRY at the forefront, the path to safer autonomous driving is clearer than ever.